Data Trust Quotient (DTQ) organized a critical knowledge session on February 20, 2026, addressing the fundamental shift from data privacy to data trust as AI systems scale across industries. The session explored a new category of risk: not just data theft, but quiet data manipulation that can make even the smartest AI make dangerously wrong decisions.

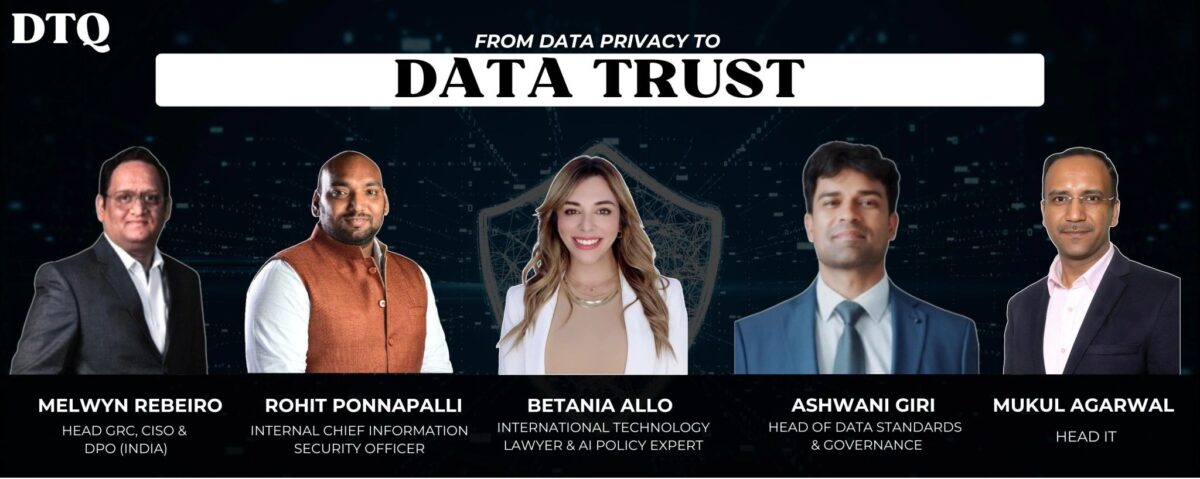

Expert Panel

The session convened four practitioners from highly regulated industries where data integrity is mission-critical:

Melwyn Rebeiro – CISO at Julius Baer, bringing extensive experience in security, risk, and compliance from ultra-regulated financial services environments, wearing both the Chief Information Security Officer and Data Protection Officer hats.

Rohit Ponnapalli – Internal CISO at Cloud4C Services, specializing in cloud security, enterprise protection, and cybersecurity for government smart city projects where real-time data integrity directly influences public infrastructure operations.

Ashwani Giri – Head of Data Standards and Governance at Zurich, working with enterprise privacy frameworks and regulators.

Mukul Agarwal – Head of IT with deep experience in IT strategy, systems, and digital transformation in the banking and financial services sector, bringing the skepticism and traceability mindset essential to financial industry operations.

Moderated by Betania Allo, an international technology lawyer and AI policy expert based in Riyadh who works at the intersection of AI governance, cybersecurity, and cross-border regulatory strategy. Hosted by Data Trust Quotient (DTQ), a global platform that brings professionals together to share practices, address challenges, and co-create solutions for building stronger trust across industries.

The Shift: From Confidentiality to Verifiable Integrity

Regulators Are Changing Their Expectations

Ashwani opened by confirming that the shift is happening at ground level as AI adoption increases. Organizations are preparing security documentation, holding internal discussions, and trying to understand what changes are required. Confidentiality was the focus of the past, and it is now a mature, well-understood discipline. The present focus is on starting discussions around veracity and verifiable data.

The Medical Prescription Analogy: Earlier, the goal was to ensure that only the right people (patient and doctor) had access. Now the expectation is that nobody is altering the prescription in the background. With AI, the equivalent expectation is that data is not poisoned or drifting and that hallucinations are kept in check.

Regulators as Trust Enablers: Regulators enable trust in the social ecosystem. As AI adoption drives change, they are moving from asking only access-related questions (identity and access management, IAM) to expecting cryptographic proof of truth, verifiable audit trails, immutable integrity checks, and mechanisms that give confidence that data is what it is claimed to be.
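One common way to make an audit trail verifiable is to chain entries together with a cryptographic hash, so that changing any earlier entry invalidates the check from that point on. The sketch below is illustrative only and was not presented in the session; the record fields and the function names `append` and `verify` are assumptions chosen for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


def append(log: list, record: dict) -> None:
    """Append a record, chaining it to the last entry in the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "hash": entry_hash(prev_hash, record)})


def verify(log: list) -> int:
    """Return the index of the first entry that fails the check, or -1."""
    prev_hash = GENESIS
    for i, entry in enumerate(log):
        if entry["hash"] != entry_hash(prev_hash, entry["record"]):
            return i
        prev_hash = entry["hash"]
    return -1


# Hypothetical audit records for illustration.
audit_log = []
append(audit_log, {"actor": "alice", "action": "read", "field": "prescription"})
append(audit_log, {"actor": "bob", "action": "update", "field": "dosage"})
append(audit_log, {"actor": "carol", "action": "read", "field": "dosage"})

intact = verify(audit_log)                    # -1: chain is consistent
audit_log[1]["record"]["actor"] = "mallory"   # quiet edit to an old entry
tampered_at = verify(audit_log)               # 1: first entry that no longer matches
```

A chain like this only detects edits by someone who cannot also recompute every later hash. In practice the latest hash would need to be anchored somewhere the editor cannot reach, for example signed or stored in a separate system.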

The Verification Challenge: Organizations claim to have their bases covered, but when regulators try to verify, many cannot demonstrate it. Apart from the most mature organizations with proper budgets and resourcing, most face this challenge and are still trying to understand the changes before implementing them.

The Timeline: Information security was in a similar position 15 years ago, when organizations struggled to find their own approaches. AI security faces the same challenges now, but the evolution will be much faster: 5-10 years to reach maturity rather than decades.

AI Readiness Without Data Provenance Is Flying Without a Black Box

When asked if organizations can truly claim AI readiness without tracking who changed data and when, Ashwani was direct: AI readiness is definitely not there in many organizations. Provenance is absolutely essential.

The Right Thing, No Matter How Hard: Organizations should do the right thing regardless of difficulty. Provenance work is already happening in bits and pieces, but not in a structured format. Requirements include policies in place, dedicated teams (not stopgap arrangements), and full commitment, rather than pulling people in temporarily just to support tasks.

The Stark Reality: AI readiness without rigorous data governance is like flying a commercial plane without a black box, without proof of provenance or source of truth. It will land nowhere.

Automation Requirements: Regulators expect automated readiness testing and red teaming (validation testing of processes) to ensure controls are designed properly and working without glitches. If less than 80% of this testing is automated, it is a problem.

The Non-Negotiable Future: Regulators are signaling this now but will become more aggressive. Provenance will be non-negotiable. Without it, enterprises are building highly efficient black boxes.

Industry Readiness: Varied Responses to the Challenge

BFSI Leads, Others Follow at Their Own Pace

Different sectors respond differently. Banking, financial services, and insurance (BFSI) and healthcare, both highly critical sectors, are early adopters and are responding well. Other industries respond at their own pace, some lagging behind, but everyone understands the importance.

The Leadership Ladder: Understanding and awareness exist. Behaviors are being introduced. Once understanding, awareness, behaviors, and ownership align, leadership emerges. AI leadership is still far away, but early adopters (especially BFSI) are doing well and holding internal discussions to create the right synergies.

No Choice But to Comply: Organizations understand this requirement is coming. They have no choice but to comply eventually.

The Vault Problem: Securing Contents, Not Just Containers

Mukul brought the financial services perspective with a critical observation: Skepticism is the word in BFSI. The industry doesn’t trust anything at face value unless traceability exists.

What Security Has Done Wrong: Traditional IT security secured the vault: fortifying infrastructure, ensuring nothing comes in, checking what goes out, logging and mitigating. But it has not verified what is inside the vault.

The Critical Gap: Did someone with the absolute right key enter the vault and modify contents? Could be malicious intent or oversight. This is where data corruption matters.

Real-World Financial Risk: What if someone changed the interest rate on a customer’s loan for a specified period, reducing the customer’s outgo and causing damage of X amount to the financial institution, and then reset it later? The change happened, it was reverted, the damage was done, and nobody noticed. This problem area still lacks adequate mitigation.
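A change that is later reverted leaves the current value looking normal, so it is only visible in the change history. As a minimal sketch, assuming every field change is captured as a record with old and new values, the scan below pairs each change with a later change that restores the original value. The `Change` structure, its field names, and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Change:
    timestamp: int   # any ordered tick; units are an assumption
    actor: str
    record_id: str
    field: str
    old_value: str
    new_value: str


def find_reverts(changes):
    """Return (change, revert) pairs where a field was changed and later put back."""
    seen = {}      # (record_id, field) -> earlier changes, oldest first
    reverts = []
    for change in sorted(changes, key=lambda c: c.timestamp):
        key = (change.record_id, change.field)
        for earlier in seen.get(key, []):
            # A revert restores the value that an earlier change replaced.
            if (earlier.new_value != earlier.old_value
                    and change.new_value == earlier.old_value):
                reverts.append((earlier, change))
                break
        seen.setdefault(key, []).append(change)
    return reverts


# Hypothetical history: one loan rate is lowered and then quietly restored.
history = [
    Change(1, "ops_user", "loan-42", "interest_rate", "9.5", "4.0"),
    Change(2, "ops_user", "loan-42", "interest_rate", "4.0", "9.5"),
    Change(3, "clerk", "loan-77", "interest_rate", "8.0", "8.25"),
]

suspicious = find_reverts(history)
for changed, reverted in suspicious:
    window = reverted.timestamp - changed.timestamp
    print(f"{changed.record_id}.{changed.field} altered by {changed.actor}, "
          f"restored after {window} tick(s)")
```

Not every revert is malicious, since corrections of honest mistakes look the same. A scan like this would only produce candidates for human review.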

Insider Risk: The Blind Spot in Mature Security

Rohit emphasized this isn’t just about regulatory requirements—it’s about trust. Organizations have controls in place, but are they using those controls to monitor behavior changes or data changes?

The Maturity Imbalance: Security has been organized as a fortress to prevent intrusion, and organizations are mature enough to keep hackers out. But there are fewer controls for insider risk management, which is where data changes and problems with data integrity, data accuracy, and data theft originate.

The Spending Gap: Leaving BFSI aside, other industries don’t spend much on tools. Organizations should start looking at insider threats and at building trust through controls embedded in day-to-day operations.

Zero Trust for Data: Beyond Access Control

Trust Nobody, Verify Everybody

Melwyn brought the perspective from Julius Baer’s highly regulated environment. Regulators are adopting zero trust—not trusting anybody, just verifying everybody. Whether insider or outsider, the boundary has completely changed.

The Regulatory Focus: Most regulators in India are focusing on getting organizations to adopt zero trust: trust nobody and always verify, so that only legitimate users access data.

The Evidence Requirement: If someone tries to tamper with data, at least you have logs or verifiable evidence that data has been tampered with and appropriate action can be taken.

From Access Zero Trust to Data Zero Trust

The zero trust mindset must extend directly to the data layer itself—continuously validating that information has not been altered.

The Shift Beyond Access: Zero trust is not only about access control but also about the data itself. Always verify the data rather than trust it. The source, integrity, and provenance of data must be verifiable in an irrefutable manner, so that tampering or malicious changes can be ruled out.
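One way to verify data before using it is to have the producing system attach a keyed message authentication code (HMAC) that the consumer recomputes. The sketch below is a simplified illustration and not something the panel prescribed; the record fields, the key, and the function names `sign` and `is_untampered` are assumptions.

```python
import hashlib
import hmac
import json


def sign(record: dict, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over a canonical JSON form of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def is_untampered(record: dict, tag: str, key: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign(record, key), tag)


# Hypothetical key and record; a real key would come from a secrets manager.
key = b"demo-key-not-for-production"
record = {"source": "plant-a", "sensor": "water-level-7", "reading": 3.2}
tag = sign(record, key)

ok_before = is_untampered(record, tag, key)    # True: record matches its tag

altered = dict(record, reading=0.4)            # quiet change to one value
ok_after = is_untampered(altered, tag, key)    # False: tag no longer matches
```

A shared-key HMAC shows that the record came from a holder of the key and has not changed since signing. Where the verifier must not be able to forge tags, a digital signature scheme would be the usual substitute.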

Why Data Is Everything: If there’s no data, there are no jobs for anyone in the room. Data is the critical aspect of decision-making and must be protected at all times.

The AI Attack Surface: Traditional cybersecurity techniques such as encryption, hashing, and salting exist. But with the advent of AI, new attacks are being mounted against data, including injection and poisoning.

The Survival Requirement: Focus must shift from zero trust access to zero trust data. Without it, organizations cannot make critical decisions and will not survive in a competitive, AI- and ML-driven world.

Multi-Dimensional Accountability

Who Owns Risk When Data Is Quietly Manipulated?

In India, the trend shows most organizations still have CISOs taking care of data because they’re considered best positioned to understand both security and privacy requirements that the DPO job demands.

Different Layers of Ownership:

- Data Owner: The reference point for data

- CISO: Provides guardrails to guard data safety against malicious attacks

- DPO: Concerned only with data privacy, ensuring it’s not impacted or hampered

- Governance: Legal and compliance teams ensuring every control is covered

Shared Responsibility: Each member has a job in the organizational chart and must do their part in protecting data. Ultimately, though, the board has overall responsibility and accountability for ensuring that the guardrails and safety measures allocated to data protection are in place and that nothing is missing.

When Data Alteration Creates Public Safety Risks

Rohit brought critical perspective from smart city and government projects where personally identifiable information (PII) and sensitive personal data are paramount—not just for cybersecurity but for counterterrorism.

The Bio-Weapon Example: If data about blood group distribution leaked—showing a city has the highest number of O-positive blood groups—a bio-weapon could be created targeting only that blood group, causing mass casualties and impacting national reputation.

Real-Time Utility Monitoring: Smart cities don’t just hold privacy data; they monitor real-time use of public services by citizens. Traffic analysis, water management during seasonal changes, public Wi-Fi usage—all create critical data that, if tampered with, could cause chaos in city operations.

The Efficiency Question: Models exist to monitor data alteration and access, but are they efficient? Considering the scale of operations, monitoring capabilities, budget limitations, and whether they treat public safety with the same seriousness as corporate security—efficiency remains a question mark.

The Tool Gap: Industry-Specific Maturity

When it comes to infrastructure security or user security, good controls exist across industries with mature maintenance. But data access management is a question mark depending on industry.

BFSI Advantage: The Reserve Bank of India mandates database access management tools, so banks have controls because they have the solutions. They can develop use cases, rules, and alerts for abnormalities, modifications, deletions, additions, and direct database access.

The Budget Challenge: Outside BFSI, getting board approval for database access management tools requires a very strong use case or a customer escalation. Without these tools, organizations rely on DB soft logs that require manual review, which makes it cumbersome for humans to spot abnormalities and amounts to postmortem analysis.

Real-Time vs. Postmortem: Manual review might take six days to discover a data modification. By then, the damage is done. With database access management (DAM) tools in place, organizations can get alerts and act in real time with preventive and corrective controls.
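The rules and alerts described above can be pictured as simple checks applied to each database event as it arrives, rather than to logs reviewed days later. The following is a toy sketch and not the interface of any real DAM product; the event fields, the table names, and the two rules are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DbEvent:
    actor: str
    operation: str          # e.g. "SELECT", "UPDATE", "DELETE", "INSERT"
    table: str
    via_application: bool   # False means a direct database session


SENSITIVE_TABLES = {"loans", "customers"}          # hypothetical table names
WRITE_OPERATIONS = {"UPDATE", "DELETE", "INSERT"}


def alerts_for(event: DbEvent) -> list:
    """Return the reasons this event should raise an alert (empty if none)."""
    reasons = []
    if not event.via_application:
        reasons.append("direct database access")
    if event.operation in WRITE_OPERATIONS and event.table in SENSITIVE_TABLES:
        reasons.append(f"{event.operation} on sensitive table {event.table}")
    return reasons


# Hypothetical event stream.
events = [
    DbEvent("app_service", "SELECT", "loans", True),
    DbEvent("dba_user", "UPDATE", "loans", False),
    DbEvent("app_service", "INSERT", "audit_notes", True),
]

flagged = [(e, alerts_for(e)) for e in events if alerts_for(e)]
for event, reasons in flagged:
    print(f"ALERT {event.actor}: " + "; ".join(reasons))
```

Evaluating each event on arrival is what separates a real-time alert from the postmortem review described above. The rules themselves would differ by industry and use case.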

Industry-Specific Reality: Controls are there but depend on how important security, integrity, and trust are to the board—determining what tools can be secured for data integrity monitoring.

Traditional Security Models Are Insufficient

Rohit identified a critical trend: Traditional data access had a system and a user or user-developed application. Controls were simple. Now there’s a third element: AI—self-adaptive, self-learning, and capable of directly accessing data.

Going Back to the Drawing Board: Everyone is returning to the drawing board to define and design controls. The whole industry, including technical people and operations teams, is validating whether traditional security controls are sufficient to handle AI operations.

The Use Case Problem: Concerns arise because controls must change for every use case. One AI tool might have eight use cases, each requiring different controls, different monitoring, different security on who’s accessing, what output is given, what data is accessed, privilege levels, potential injection attacks, and command exploitation.

Output Modification Threat: It’s not just about data modification. What if output is modified? Hackers don’t need to get into databases to modify data if they can modify output directly. This concern is getting significant attention.

The Level Question: Organizations must determine at what level they’re discussing data integrity—making it a complex, layered challenge.

Key Questions Defining Data Trust

Is Data Trust Just Rebranding Privacy?

Ashwani’s answer: Data trust is the next level of data privacy. Privacy focused on keeping data safe. The question now: Is the data you’ve kept trustable? Is somebody altering or changing it? Is it the right data collected in the first place?

End-to-End Protection: Ensuring you’re collecting data that’s right and fit for purpose, protecting it with all possible controls until consumption, and having the right pipeline protecting from end to end with proper lineage.

Traceability Requirement: You should be able to identify where trust is broken. If somebody altered data, you must be able to trace it.

The Future Parameter: Data trust is the next step beyond traditional data privacy controls, and it is paramount for organizations that want to succeed in the fully AI-driven era ahead.

The DPO Triad: As Rohit suggested to a DPO colleague—information security has three attributes (confidentiality, integrity, availability). For DPOs, it should be privacy, security, and trust defining overall governance.

Three Years Forward: Trusted vs. Just Compliant

Melwyn’s perspective: Trust is extremely important and goes one level beyond compliance. Which of the two drives the other has shifted over time.

Why Both Matter: Everyone wants to be compliant because penalties are high and heavy. Everyone wants to be trusted because without being a trusted brand or company, you’re out of business—competitors are already ahead.

The Reversal: Compliance is not driving trust. Trust is driving compliance. It’s a non-negotiable, hand-in-glove situation.

The Drinkable Water Example: Mukul provided a perfect analogy: Someone asks for water. Giving a glass of water is compliance. But was that water drinkable? That’s trust. Would you trust the person who gave drinkable water, or just take water from someone who was merely compliant?

No Shortcut to Trust: Ashwani emphasized trust cannot be bought with budget instantly. It takes time, requiring continuous good work to earn it. Trust is a real differentiator earned only by fixing things at ground level. There’s no shortcut to trust.

Compliance as Checkbox vs. Backbone

Rohit highlighted that compliance is a satisfaction factor for customers. When you want to prove you have good security controls, compliance comes into the picture.

The Dangerous Trend: Compliance is becoming a checkbox, a trend that should not be taken lightly. Compliance should be the backbone on which you build more security controls. Some organizations treat it as a checkbox and declare themselves compliant, but the effectiveness and efficiency of their controls remain questionable.

Priority Actions for the Next 24 Months

People, Process, Technology—In That Order

Ashwani’s Framework: Organizations must ensure the right standards, policies, procedures, and mandates are in place. They should identify the right people for the work and agree on a RACI matrix (who is responsible, accountable, consulted, and informed) that defines roles clearly.

The ground framework comes first; the rest is technology-related. Fixing the people part, the human factor, is always most important. Once you fix the human vector, everything else comes with much more ease.

Mindset and Culture Change

Melwyn’s Priority: The mindset must change when discussing privacy, data security, and integrity. Culture has to be there. Without the right mindset, culture, ethos, and ethics to govern, even the best controls, equipment, or security will not work.

The right mindset is the key to success.

Access Monitoring and Traceability

Rohit’s Focus: Culture is a never-ending job of awareness sessions and phishing simulations, and despite those efforts 10-20% of people still violate policy. Purely from a trust standpoint, though, organizations already have enough controls to know who has access to systems.

Three Critical Questions: Focus on controls that establish who has access to systems or data, who is modifying data, and what is being modified. Answer these three questions and trust can be built far more easily.

Explainable AI with Human in the Loop

Mukul’s Guidance: Many organizations live in the hype of deploying AI and trusting their data with AI. There must be a human in the loop, and AI must be explainable.

Explainable AI with a human in the loop is the key phrase when trusting AI models with data. At least jobs are safe under this approach: people are still needed to validate.

Conclusion: Trust Cannot Be Bought, Only Earned

The session revealed unanimous agreement: The future belongs to organizations with the most trusted data, not just the most data or the most advanced AI.

Trust is the cornerstone of AI-driven ecosystems. Provenance is non-negotiable. Zero trust must extend from access control to the data layer itself. Accountability is multi-dimensional across boards, executive leadership, technology teams, and legal compliance.

As India accelerates its AI ambitions (it was hosting the AI Summit at the time of this session), embedding verifiable integrity at scale becomes essential, not only for foundational institutional credibility across sectors but also for defining long-term leadership.

Key principles emerged: Do the right thing no matter how hard. Fix the human factor first. Treat compliance as backbone, not checkbox. Remember there’s no shortcut to trust—it must be earned through continuous good work fixing things at ground level.

The shift from data privacy to data trust represents the next evolution in data governance—moving from protecting data from unauthorized access to ensuring data remains true, accurate, and verifiable throughout its lifecycle in AI-driven systems.

This Data Trust Knowledge Session provided essential frameworks for organizations navigating the evolution from data privacy to data trust. Expert panel: Melwyn Rebeiro (Julius Baer), Rohit Ponnapalli (Cloud4C Services), Ashwani Giri (Zurich), and Mukul Agarwal (BFSI sector). Moderated by Betania Allo.